Functions

-

Multiple Protocols

Multiple protocols are supported at Layer 4 and Layer 7, facilitating diverse application scenarios.

-

High Availability

A variety of measures ensure high service availability and quality.

-

Layer 4

TCP and UDP are used to handle services that receive a large number of access requests or require high performance.

-

Layer 7

Both HTTP and HTTPS are supported. Multiple encryption protocols and cipher suites are available for HTTPS to ensure service flexibility and security.

-

Health Check

Server health status is regularly monitored and traffic is distributed only to healthy servers, ensuring service continuity.
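The idea that probe results decide which servers may receive traffic can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `Backend` class, `record_probe`, `eligible_backends`, and the consecutive-probe thresholds (`healthy_after`, `unhealthy_after`) are hypothetical and are not ELB's documented parameters.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    healthy: bool = True
    successes: int = 0  # consecutive successful probes
    failures: int = 0   # consecutive failed probes


def record_probe(b: Backend, ok: bool, healthy_after: int = 2, unhealthy_after: int = 3) -> None:
    """Update health state from one probe result. The thresholds are hypothetical defaults."""
    if ok:
        b.successes += 1
        b.failures = 0
        if b.successes >= healthy_after:
            b.healthy = True
    else:
        b.failures += 1
        b.successes = 0
        if b.failures >= unhealthy_after:
            b.healthy = False


def eligible_backends(backends: list[Backend]) -> list[Backend]:
    """Only servers currently marked healthy are candidates for new traffic."""
    return [b for b in backends if b.healthy]


servers = [Backend("server-01"), Backend("server-02")]
for _ in range(3):
    record_probe(servers[0], ok=False)  # server-01 fails three probes in a row
print([b.name for b in eligible_backends(servers)])  # ['server-02']
```

Requiring several consecutive results in either direction keeps a single dropped probe from flapping a server in and out of rotation.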

-

Cross-AZ Load Balancing

ELB distributes traffic across availability zones (AZs) so that services remain available even if an AZ fails because of a disaster.

-

Sticky Sessions

Requests from a client for a particular session are forwarded to the same backend server to ensure that the client has continuous access.

-

Elastic Scaling

ELB automatically scales its request handling capacity and works with Auto Scaling to improve service flexibility and reliability.

-

Layer 4

Source IP addresses are used to maintain sessions at Layer 4.

-

Layer 7

Cookies are used to maintain sessions at Layer 7.
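Cookie-based session stickiness can be illustrated with a short Python sketch. It is an illustration under assumptions, not ELB's implementation: the cookie name `lb_sticky`, the function `route_with_cookie`, and storing the server name in plain text are all hypothetical simplifications (a production load balancer would typically use an opaque value).

```python
import random

STICKY_COOKIE = "lb_sticky"  # hypothetical cookie name; the real name and format are not documented here


def route_with_cookie(cookies: dict[str, str], servers: list[str]) -> tuple[str, dict[str, str]]:
    """Return the chosen server and any cookie the response should set.

    If the request carries a sticky cookie naming a server that is still available,
    that server is reused; otherwise a server is chosen and a new cookie is issued.
    """
    pinned = cookies.get(STICKY_COOKIE)
    if pinned in servers:
        return pinned, {}
    chosen = random.choice(servers)  # stand-in for the group's load balancing algorithm
    return chosen, {STICKY_COOKIE: chosen}


servers = ["server-01", "server-02"]
first, set_cookie = route_with_cookie({}, servers)  # first request: no cookie yet
second, _ = route_with_cookie(set_cookie, servers)  # the client sends the cookie back
assert first == second
```

If the pinned server leaves the group, the cookie no longer matches and the client is simply rebalanced and issued a new cookie.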

-

Seamless Integration

Auto Scaling automatically adjusts the number of servers as necessary to cope with varying levels of workload.

-

Automatic Expansion

ELB automatically scales with incoming traffic to ensure a positive user experience.

How ELB Works

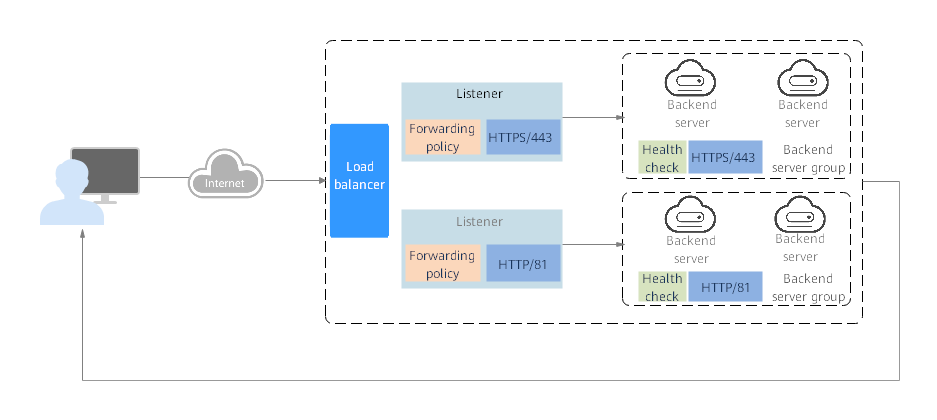

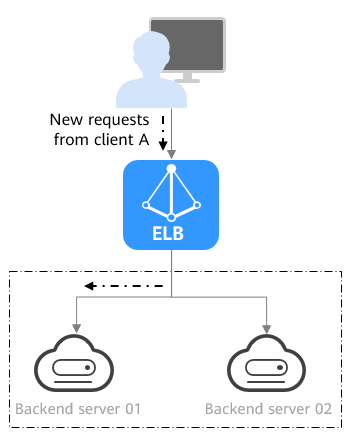

Figure 1 How ELB works

The following describes how ELB works:

1. A client sends a request to your application.

2. The listeners added to your load balancer use the protocols and ports you have configured to receive the request.

3. The listener forwards the request to the associated backend server group based on your configuration. If you have configured a forwarding policy for the listener, the listener evaluates the request based on the forwarding policy. If the request matches the forwarding policy, the listener forwards the request to the backend server group configured for the forwarding policy.

4. Healthy backend servers in the backend server group receive the request based on the load balancing algorithm and the routing rules you specify in the forwarding policy, handle the request, and return a result to the client.

How requests are routed depends on the load balancing algorithms configured for each backend server group. If the listener uses HTTP or HTTPS, how requests are routed also depends on the forwarding policies configured for the listener.
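The request path described above (listener, optional forwarding policy, backend server group, load balancing algorithm) can be modeled roughly in Python. This is a hedged sketch: the classes `ServerGroup`, `ForwardingPolicy`, and `Listener`, the host/path-prefix matching, and the "first matching policy wins" rule are assumptions chosen for illustration, not ELB's API or its exact policy semantics.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ServerGroup:
    name: str
    healthy_servers: list[str]             # assumed to be already filtered by health checks
    algorithm: Callable[[list[str]], str]  # the group's load balancing algorithm


@dataclass
class ForwardingPolicy:
    host: Optional[str]         # domain name to match, or None to match any
    path_prefix: Optional[str]  # URL prefix to match, or None to match any
    group: ServerGroup

    def matches(self, host: str, path: str) -> bool:
        host_ok = self.host is None or host == self.host
        path_ok = self.path_prefix is None or path.startswith(self.path_prefix)
        return host_ok and path_ok


@dataclass
class Listener:
    protocol: str
    port: int
    default_group: ServerGroup
    policies: list[ForwardingPolicy] = field(default_factory=list)

    def route(self, host: str = "", path: str = "/") -> str:
        group = self.default_group
        if self.protocol in ("HTTP", "HTTPS"):  # forwarding policies only apply at Layer 7
            for policy in self.policies:        # first matching policy wins in this sketch
                if policy.matches(host, path):
                    group = policy.group
                    break
        return group.algorithm(group.healthy_servers)


def first_server(servers: list[str]) -> str:
    return servers[0]  # trivial placeholder algorithm


web = ServerGroup("web", ["web-01", "web-02"], first_server)
api = ServerGroup("api", ["api-01"], first_server)
listener = Listener("HTTP", 80, web, [ForwardingPolicy(None, "/api/", api)])
print(listener.route("www.example.com", "/api/users"))   # api-01
print(listener.route("www.example.com", "/index.html"))  # web-01
```

Any of the algorithms described in the next section can be plugged in as `algorithm`.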

Load Balancing Algorithms

Dedicated load balancers support four load balancing algorithms: weighted round robin, weighted least connections, source IP hash, and connection ID. Shared load balancers support three load balancing algorithms: weighted round robin, weighted least connections, and source IP hash.

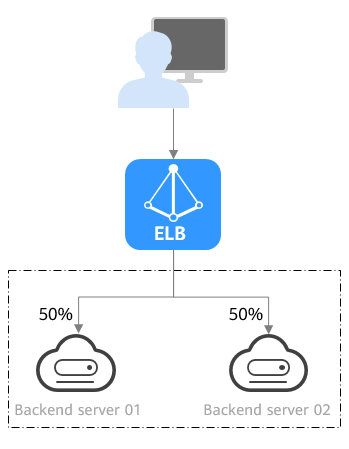

Weighted round robin: Requests are routed to backend servers using the round robin algorithm. Backend servers with higher weights receive proportionately more requests, whereas equal-weighted servers receive the same number of requests. This algorithm is often used for short connections, such as HTTP connections.

The following figure shows an example of how requests are distributed using the weighted round robin algorithm. Two backend servers are in the same AZ and have the same weight, and each server receives the same proportion of requests.

Figure 2 Traffic distribution using the weighted round robin algorithm

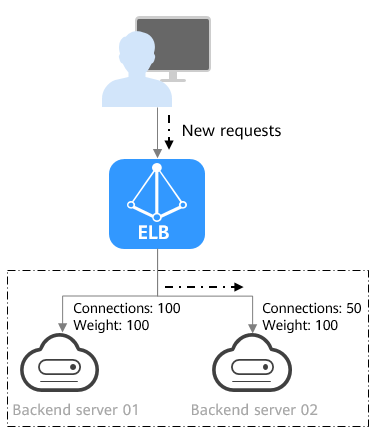
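Weighted round robin can be sketched in Python using the "smooth" variant popularized by nginx, which interleaves servers instead of sending a burst of requests to the heaviest one. This is a swapped-in, well-known technique for illustration; ELB's internal implementation is not documented here, and skipping zero-weight servers is an assumption of this sketch.

```python
from collections import Counter


class SmoothWeightedRoundRobin:
    """Smooth weighted round robin (nginx-style); a sketch, not ELB's implementation."""

    def __init__(self, weights: dict[str, int]) -> None:
        # Servers with weight 0 are skipped (an assumption of this sketch).
        self.weights = {s: w for s, w in weights.items() if w > 0}
        self.current = {s: 0 for s in self.weights}
        self.total = sum(self.weights.values())

    def next(self) -> str:
        # Every server gains its weight; the leader is chosen and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


equal = SmoothWeightedRoundRobin({"server-01": 1, "server-02": 1})
print([equal.next() for _ in range(4)])  # ['server-01', 'server-02', 'server-01', 'server-02']

skewed = SmoothWeightedRoundRobin({"server-01": 3, "server-02": 1})
print(Counter(skewed.next() for _ in range(8)))  # Counter({'server-01': 6, 'server-02': 2})
```

With equal weights the servers simply alternate, matching Figure 2; with a 3:1 weight ratio, every cycle of four requests sends three to the heavier server.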

Weighted least connections: In addition to the weight assigned to each server based on its capacity, the number of connections being processed by each backend server is also considered. Requests are routed to the server with the lowest connections-to-weight ratio. This algorithm is often used for persistent connections, such as connections to a database.

The following figure shows an example of how requests are distributed using the weighted least connections algorithm. Two backend servers are in the same AZ and have the same weight. 100 connections have been established with backend server 01 and 50 with backend server 02, so new requests are preferentially routed to backend server 02.

Figure 3 Traffic distribution using the weighted least connections algorithm

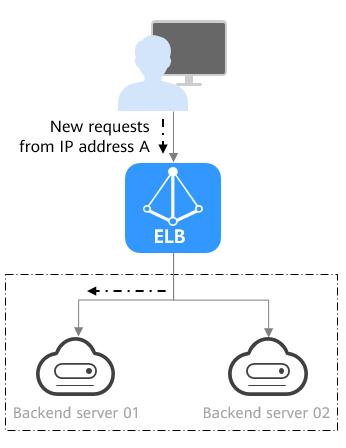
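The selection rule of weighted least connections fits in one function. In this minimal sketch, `pick_least_connections` and the dictionaries of active connections and weights are hypothetical names; the loop replays the Figure 3 scenario by routing 50 new connections.

```python
def pick_least_connections(active: dict[str, int], weights: dict[str, int]) -> str:
    """Return the server with the lowest active-connections-to-weight ratio."""
    candidates = [s for s in active if weights.get(s, 0) > 0]  # zero-weight servers are skipped
    return min(candidates, key=lambda s: active[s] / weights[s])


active = {"server-01": 100, "server-02": 50}
weights = {"server-01": 1, "server-02": 1}
for _ in range(50):  # each new connection goes to the least-loaded server
    active[pick_least_connections(active, weights)] += 1
print(active)  # {'server-01': 100, 'server-02': 100}
```

All 50 new connections land on backend server 02 until both servers hold the same number of connections; after that, new connections alternate between the two.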

Source IP hash: The source IP address of each request is hashed using the consistent hashing algorithm to obtain a hash key, and all backend servers are numbered. The generated key determines which server the client is allocated to. This allows requests from different clients to be routed based on their source IP addresses and ensures that a client is directed to the same server it used previously. This algorithm works well for TCP connections of load balancers that do not use cookies.

The following figure shows an example of how requests are distributed using the source IP hash algorithm. Two backend servers are in the same AZ and have the same weight. If backend server 01 has processed a request from IP address A, the load balancer will route new requests from IP address A to backend server 01.

Figure 4 Traffic distribution using the source IP hash algorithm
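Consistent hashing on the source IP can be sketched with a hash ring. This is an illustration under assumptions: the `ConsistentHashRing` class, the use of MD5 as a stable hash, and 100 virtual nodes per server are choices made for this sketch (ELB's hash function is not documented here), and server weights are ignored for brevity.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # MD5 is used only as a stable, well-distributed hash, not for security.
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, servers: list[str], vnodes: int = 100) -> None:
        # Each server is placed on the ring many times to even out the distribution.
        self._ring = sorted((_hash(f"{s}#{i}"), s) for s in servers for i in range(vnodes))
        self._keys = [h for h, _ in self._ring]

    def lookup(self, source_ip: str) -> str:
        """Map a source IP to the first server clockwise from its position on the ring."""
        idx = bisect.bisect(self._keys, _hash(source_ip)) % len(self._ring)
        return self._ring[idx][1]


clients = [f"198.51.100.{i}" for i in range(1, 201)]
three = ConsistentHashRing(["server-01", "server-02", "server-03"])
two = ConsistentHashRing(["server-01", "server-02"])
moved = [ip for ip in clients if three.lookup(ip) != two.lookup(ip)]
# Only clients that were mapped to server-03 move when it is removed.
assert all(three.lookup(ip) == "server-03" for ip in moved)
```

The same IP address always hashes to the same point, so a client keeps reaching the same server; and when a server is added or removed, only the clients on its arc of the ring are remapped, which is what distinguishes consistent hashing from a plain `hash(ip) % n`.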

Connection ID: The connection ID in the packet is hashed using the consistent hashing algorithm to obtain a specific value, and backend servers are numbered. The generated value determines which backend server the requests are routed to. This allows requests with different connection IDs to be routed to different backend servers and ensures that requests with the same connection ID are routed to the same backend server. This algorithm applies to QUIC requests.

Figure 5 shows an example of how requests are distributed using the connection ID algorithm. Two backend servers are in the same AZ and have the same weight. If backend server 01 has processed a request from client A, the load balancer will route new requests from client A to backend server 01.

Figure 5 Traffic distribution using the connection ID algorithm
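Routing by QUIC connection ID involves two steps: extracting the Destination Connection ID from the packet header and mapping it to a server. The header parsing below follows the RFC 9000 layouts (a long header carries a 4-byte version and a 1-byte DCID length; a short header does not encode the DCID length, so the load balancer must know it). Everything else is an assumption of this sketch: the fixed 8-byte short-header length, the function names, and the use of rendezvous (highest-random-weight) hashing, a consistent hashing scheme swapped in here for brevity instead of a hash ring.

```python
import hashlib

SHORT_HEADER_DCID_LEN = 8  # assumption: short headers don't carry the length, so it is configured


def destination_connection_id(packet: bytes) -> bytes:
    """Extract the Destination Connection ID from a QUIC packet (RFC 9000 header layouts)."""
    if packet[0] & 0x80:  # long header: flags(1) | version(4) | DCID length(1) | DCID
        dcid_len = packet[5]
        return packet[6:6 + dcid_len]
    # short header: flags(1) | DCID (length known out of band)
    return packet[1:1 + SHORT_HEADER_DCID_LEN]


def pick_by_connection_id(cid: bytes, servers: list[str]) -> str:
    """Rendezvous hashing: each server scores the ID, and the highest score wins."""
    def score(server: str) -> bytes:
        return hashlib.sha256(server.encode() + b"|" + cid).digest()
    return max(servers, key=score)


servers = ["server-01", "server-02"]
cid = bytes.fromhex("0011223344556677")
long_pkt = bytes([0xC0]) + (1).to_bytes(4, "big") + bytes([len(cid)]) + cid + b"\x00rest"
short_pkt = bytes([0x40]) + cid + b"payload"
# Both packets of the connection carry the same ID, so they reach the same backend.
assert pick_by_connection_id(destination_connection_id(long_pkt), servers) == \
       pick_by_connection_id(destination_connection_id(short_pkt), servers)
```

Because the mapping depends only on the connection ID and not on the client's address, requests on a QUIC connection keep reaching the same backend even if the client's IP address or port changes.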

Factors Affecting Load Balancing

In addition to the load balancing algorithm, factors that affect load balancing generally include connection type, session stickiness, and server weights.

Assume that there are two backend servers with the same non-zero weight, the weighted least connections algorithm is selected, sticky sessions are not enabled, and 100 connections have been established with backend server 01 and 50 with backend server 02.

When client A accesses backend server 01, the load balancer establishes a persistent connection with backend server 01 and keeps routing requests from client A to backend server 01 until the persistent connection is closed. Requests from other clients are routed to backend server 02 using the weighted least connections algorithm.
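The effect of connection type in this scenario can be modeled in a short Python sketch: requests on an open persistent connection stay on that connection's backend regardless of load, while only new connections are balanced. The `ConnectionBalancer` class and its methods are hypothetical and model just this scenario, not ELB internals.

```python
from typing import Optional


class ConnectionBalancer:
    """Keeps each client on its open persistent connection; only new connections are balanced."""

    def __init__(self, active: dict[str, int], weights: dict[str, int],
                 pinned: Optional[dict[str, str]] = None) -> None:
        self.active = dict(active)          # open connections per backend
        self.weights = weights
        self.pinned = dict(pinned or {})    # client -> backend of its open persistent connection

    def _least_connections(self) -> str:
        return min(self.active, key=lambda s: self.active[s] / self.weights[s])

    def request(self, client: str) -> str:
        if client not in self.pinned:       # no open connection: balance a new one
            server = self._least_connections()
            self.pinned[client] = server
            self.active[server] += 1
        return self.pinned[client]          # open connection: reuse it, whatever the counts say

    def disconnect(self, client: str) -> None:
        self.active[self.pinned.pop(client)] -= 1


lb = ConnectionBalancer(
    active={"server-01": 100, "server-02": 50},
    weights={"server-01": 1, "server-02": 1},
    pinned={"client-A": "server-01"},  # client A's connection is one of server 01's 100
)
print(lb.request("client-A"))  # server-01 (still on its persistent connection)
print(lb.request("client-B"))  # server-02 (new connection, lowest connections-to-weight ratio)
```

This is why traffic can look unevenly distributed even though the algorithm is working as configured: long-lived connections keep their backend until they are closed.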

For details about the weighted least connections algorithm, see Load Balancing Algorithms.

If requests are not evenly routed, troubleshoot the issue by performing the operations described in How Do I Check Whether Traffic Is Evenly Distributed?

Product Advantages

Robust Performance

ELB can establish up to 100 million concurrent connections and meet your requirements for handling huge numbers of concurrent requests.

High Availability

ELB is deployed in cluster mode and ensures that your services are uninterrupted. If servers in an AZ are unhealthy, ELB automatically routes traffic to healthy servers in other AZs.

High Scalability

ELB makes sure that your applications always have enough capacity for varying levels of workloads. It works with Auto Scaling to flexibly adjust the number of servers and intelligently distribute incoming traffic across servers.

Easy to Use

A diverse set of protocols and algorithms enables you to configure traffic routing policies to suit your needs while keeping deployments simple.

Application Scenarios

High Traffic Websites

ELB intelligently distributes traffic to ensure smooth running of applications, making it a good choice for websites with an extremely large number of simultaneous requests.

Advantages

Disaster Recovery

ELB can distribute incoming traffic across availability zones, offering high service availability to enterprises such as banks.

Advantages

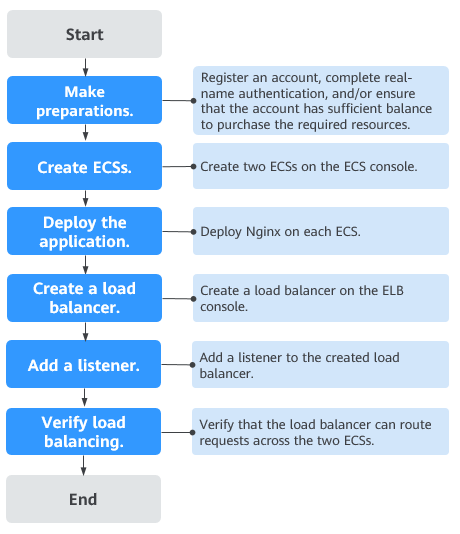

Getting Started

Figure 1 shows how you can use the basic functions of ELB to route requests if you are new to ELB, and Figure 2 shows how you can use ELB's domain name- or URL-based forwarding to route requests more efficiently.

Figure 1 Getting started - entry level

Figure 2 Getting started - advanced level

Videos

Elastic Load Balance Brief Introduction

03:20

Configuring ELB

04:46

Configuring Access Logging

03:49

What Can I Do If Backend Server Is Unhealthy?

06:03